We are thrilled to announce the release of Bacalhau 1.2! Following the Bacalhau 1.1 release in September 2023, we’ve explored a variety of innovative and groundbreaking use cases, such as:

- Saving $2.5M Per Year by Managing Logs the AWS Way

- Edge-based Machine Learning Inference

- Distributed Warehousing Made Easy With Bacalhau

- How to Solve Edge Container Orchestration

We are also proud to announce that the U.S. Navy chose Bacalhau to manage predictive maintenance workloads!

But that’s just the start – Bacalhau 1.2 is packed with new features, enhancements, and bug fixes to improve your workflow and overall user experience.

Read on to learn more about these updates, or install straight away.

Job Templates

Users can now create and customize job templates, which makes it easier to create a large number of similar jobs.

A job template contains named placeholders:

    Name: docker job
    Type: batch
    Count: 1
    Tasks:
      - Name: main
        Engine:
          Type: docker
          Params:
            Image: ubuntu:latest
            Entrypoint:
              - /bin/bash
            Parameters:
              - -c
              - echo {{.greeting}} {{.name}}

The placeholders will be filled in during a call to bacalhau job run with environment variables or command-line flags:

    export greeting=Hello
    bacalhau job run job.yaml --template-vars "name=World" --template-envs "*"

The templating functionality is based on the Go text/template package. This robust library offers a wide range of features for manipulating and formatting text based on template definitions and input variables. For more information about the Go text/template library and its syntax, you can refer to the official documentation: Go text/template Package.
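To illustrate how the named placeholders are filled in, here is a minimal sketch that renders the same Parameters line with Go's text/template package directly. The helper name renderJobSpec and the choice to fail on a missing variable are assumptions made for this example; they are not taken from the Bacalhau source, and Bacalhau's own handling of unset variables may differ.

```go
package main

import (
	"fmt"
	"os"
	"strings"
	"text/template"
)

// renderJobSpec is a hypothetical helper: it fills the named placeholders in
// a job template string with the supplied variables.
func renderJobSpec(spec string, vars map[string]string) (string, error) {
	// missingkey=error is a choice made for this sketch so that an unset
	// placeholder fails loudly instead of being rendered as an empty value.
	tmpl, err := template.New("job").Option("missingkey=error").Parse(spec)
	if err != nil {
		return "", err
	}
	var out strings.Builder
	if err := tmpl.Execute(&out, vars); err != nil {
		return "", err
	}
	return out.String(), nil
}

func main() {
	// The same placeholders as in the job template above.
	rendered, err := renderJobSpec("echo {{.greeting}} {{.name}}", map[string]string{
		"greeting": "Hello",
		"name":     "World",
	})
	if err != nil {
		fmt.Fprintln(os.Stderr, "render failed:", err)
		os.Exit(1)
	}
	fmt.Println(rendered)
}
```

With the variables shown, the rendered command is echo Hello World, which is what the container would run.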

Telemetry From Inside WASM Jobs With Dylibso Integration

This release introduces the ability to collect telemetry data from within WebAssembly (WASM) jobs via integration with the Dylibso Observe SDK.

Now, WebAssembly modules that have been automatically or manually instrumented will pass tracing information to any OTEL endpoints configured for Bacalhau to use. This happens automatically if OTEL is configured and the WASM is instrumented. Uninstrumented WASM continues to be run as normal with no required changes.

This allows WASM jobs to pass telemetry into Jaeger or any other configured OTEL client:

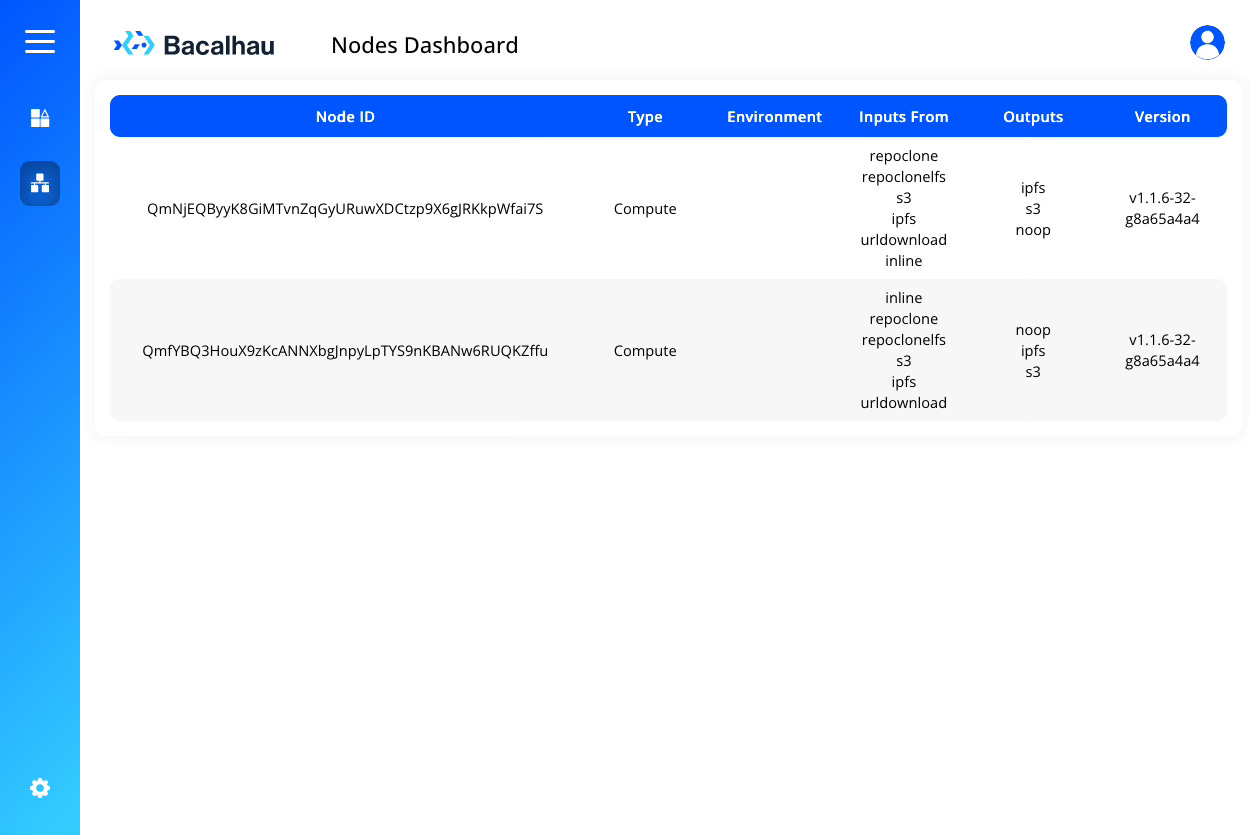

Web UI

For the demonstration network, you can now visit http://bootstrap.production.bacalhau.org to see the web UI dashboard in action.

It is simple to add the web UI to your own private cluster: just pass --web-ui to your bacalhau serve command or set Node.WebUI: yes in your config file. Once set, Bacalhau will serve the web UI on port 80 automatically with no further configuration required.

Support for AMD and Intel GPUs

Users can now take advantage of the power of AMD and Intel GPUs for their computational tasks, in addition to our existing support for Nvidia GPUs.

Now, if a compute node has the AMD utility rocm-smi or the Intel utility xpu-smi installed, Bacalhau will automatically detect its GPUs at boot and make them available for use in Docker jobs.

Further, details on available GPUs are now available from the Nodes API. Calls to /api/v1/orchestrator/nodes now return extended GPU information that identifies the GPU’s vendor, available VRAM, and model name.
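As a rough sketch of how a client might inspect this information, the following program requests the nodes endpoint and prints the JSON it receives. The base URL is supplied on the command line and is an assumption (use the address of your own requester node); the helper names nodesURL and fetchNodes are hypothetical, and the response schema is deliberately not modelled because the field names are not given here.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
	"strings"
)

// nodesPath is the endpoint named in the text above.
const nodesPath = "/api/v1/orchestrator/nodes"

// nodesURL joins a base API address with the nodes endpoint.
// The base address is an assumption supplied by the caller.
func nodesURL(baseURL string) string {
	return strings.TrimRight(baseURL, "/") + nodesPath
}

// fetchNodes returns the raw JSON response, re-indented for reading.
func fetchNodes(baseURL string) ([]byte, error) {
	resp, err := http.Get(nodesURL(baseURL))
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()

	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status %s", resp.Status)
	}

	var pretty bytes.Buffer
	if err := json.Indent(&pretty, body, "", "  "); err != nil {
		return nil, err
	}
	return pretty.Bytes(), nil
}

func main() {
	if len(os.Args) < 2 {
		fmt.Println("usage: nodes <base-url>")
		return
	}
	out, err := fetchNodes(os.Args[1])
	if err != nil {
		fmt.Fprintln(os.Stderr, "request failed:", err)
		os.Exit(1)
	}
	fmt.Println(string(out))
}
```

Printing the raw response is a deliberate choice: it lets you see the GPU vendor, VRAM, and model fields as the server actually names them before writing typed structs against them.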

Support for Multiple GPUs in Docker Jobs

We now support the utilization of multiple GPUs in Docker jobs, enabling workload splitting and parallel processing.

Users can take advantage of multiple GPUs by passing the --gpu=... flag to bacalhau docker run or by specifying the number of GPUs in the Resources section of the job spec. The job will wait for the requested number of GPUs to become available, at which point it will have exclusive use of those GPUs for as long as it is executing.

Results Download From S3-Compatible Buckets

Users can now download results directly from S3-compatible buckets using bacalhau get, simplifying the data retrieval process.

The S3 Publisher will now generate pre-signed URLs on demand, so Bacalhau clients can download job results without needing to provide credentials to the bucket itself. This requires the requester node to have appropriate IAM permissions for reading buckets.

Support for Google Cloud Buckets

Users can now seamlessly integrate Google Cloud Storage (GCS) buckets into their jobs, allowing for easy storage and retrieval of data.

To use GCS buckets, request data from a GCS storage endpoint or configure an S3 publisher using a [storage.googleapis.com](<http://storage.googleapis.com>) endpoint.

Programmatic API for Manipulating Config

Developers can now programmatically manipulate configuration settings using the new bacalhau config command, providing more flexibility and automation in managing Bacalhau nodes.

- Use bacalhau config list to show the current state of configuration variables including defaults.

- Use bacalhau config set <key> <value> to permanently set a configuration option.

- Use bacalhau config default to generate a Bacalhau configuration file in YAML format without any user-specific overrides in place.

- Use bacalhau config auto-resources to detect available system capacity (CPU, RAM, Disk, GPUs, etc.) and write the capacity into the config file. Users can specify a number from 0-100 to limit the capacity to that percentage of total capacity. A number over 100 can also be used to overcommit on capacity, which is useful mainly for setting queue capacities.
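The percentage passed to auto-resources can be pictured as a simple scaling of the detected totals. The sketch below is illustrative arithmetic only: the Capacity type, the scaleCapacity function, and the round-down behaviour for whole units such as GPUs are assumptions made for this example, not Bacalhau's actual implementation.

```go
package main

import "fmt"

// Capacity is a hypothetical stand-in for the resources detected on a node.
type Capacity struct {
	CPUCores    float64
	MemoryBytes uint64
	DiskBytes   uint64
	GPUs        uint64
}

// scaleCapacity returns the given percentage of the detected totals.
// Values below 100 limit capacity; values above 100 overcommit it.
// Integer fields are rounded down, which is an assumption of this sketch.
func scaleCapacity(total Capacity, percent uint64) Capacity {
	return Capacity{
		CPUCores:    total.CPUCores * float64(percent) / 100,
		MemoryBytes: total.MemoryBytes * percent / 100,
		DiskBytes:   total.DiskBytes * percent / 100,
		GPUs:        total.GPUs * percent / 100,
	}
}

func main() {
	// Example node: 8 cores, 32 GiB RAM, 500 GiB disk, 2 GPUs.
	detected := Capacity{CPUCores: 8, MemoryBytes: 32 << 30, DiskBytes: 500 << 30, GPUs: 2}

	for _, percent := range []uint64{75, 100, 150} {
		fmt.Printf("%3d%% -> %+v\n", percent, scaleCapacity(detected, percent))
	}
}
```

For the example node, 75 yields 6 cores and 24 GiB of RAM, while 150 yields 12 cores and 48 GiB, which is more than the machine physically has and is why overcommitting mainly makes sense for queue capacities.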

Improvements and Bug Fixes

- Sanity checking of WebAssembly resource usage: we now check that WebAssembly jobs have requested less than the maximum possible RAM size of 4GB.

- Helpful messages when jobs fail to find nodes: improved error messaging provides users with the reason each node rejected or was not suitable for the job.

- Improvements to long-running jobs: long-running jobs are no longer subject to execution timeouts, and the CLI no longer waits for them to finish after submission.

- Automatic notification of new software versions: users will now receive automatic notifications when new software versions are available, keeping them up to date with the latest features and improvements.

What’s Coming Next?

We have lots of new features coming in the next quarter. A selection of these items includes:

- Easier bootstrapping – More easily establish a full network of Bacalhau nodes running across multiple regions and clouds.

- Native Python executor – A pluggable executor that can run raw Python against a Bacalhau network.

- Network queues – Jobs are queued (up to indefinitely) at the network level and scheduled only when resources become available. Fully supports all current scheduling constructs including all node metadata.

- Managed services – In a single action via our API, users can deploy a cluster to GCP, AWS, or Azure according to their zone/region criteria (new nodes, not existing ones).

- Rich, periodic node metadata – Nodes can provide custom metadata (maybe as labels, or maybe as something more structured) that can be configured to refresh on a custom schedule or as the result of a job.

How to Get Involved

We’re looking for help in several areas, and there are several ways to contribute. Please reach out to us at any of the following locations.

Commercial Support

While Bacalhau is open-source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us!